Research

Our research mainly involves understanding and generating human affective and social behaviors, such as emotions, empathy and rapport. Such technologies can be used to animate virtual humans or social robots. We aim to work towards technologies that do not replace but augment human-human interaction. We are also interested in studying the verbal and nonverbal behaviors associated with mental health disorders.

Behavior generation

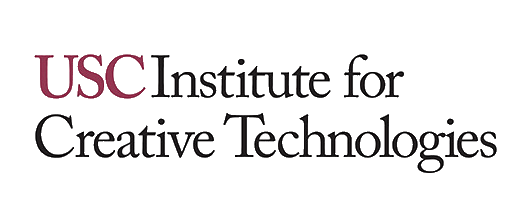

Generative modeling of human social behaviors (e.g., with diffusion models and GANs) has broad applications that aim to replicate the complex dynamics of human interaction. Our lab’s recent work in this field uses generative models to simulate human nonverbal behaviors and body movements. By combining generative models such as diffusion models and VQ-GAN, our research aims to capture how humans respond to and influence each other in social contexts. These models make virtual agents more lifelike and responsive, and open new avenues for applications in virtual reality, interactive media, and assistive technologies. By grounding these generative models in human-human interaction, we aim to bridge the gap between artificial representations and authentic social behaviors, paving the way for artificial social intelligence.

Representative publications

- M. Tran, D. Chang, M. Siniukov, M. Soleymani. DIM: Dyadic Interaction Modeling for Social Behavior Generation. European Conference on Computer Vision (ECCV), Milan, Italy, 2024.

- D. Chang, Y. Shi, Q. Gao, H. Xu, J. Fu, G. Song, Q. Yan, Y. Zhu, X. Yang, M. Soleymani. MagicPose: Realistic Human Poses and Facial Expressions Retargeting with Identity-aware Diffusion. International Conference on Machine Learning (ICML), Vienna, Austria, 2024.

Emotion recognition

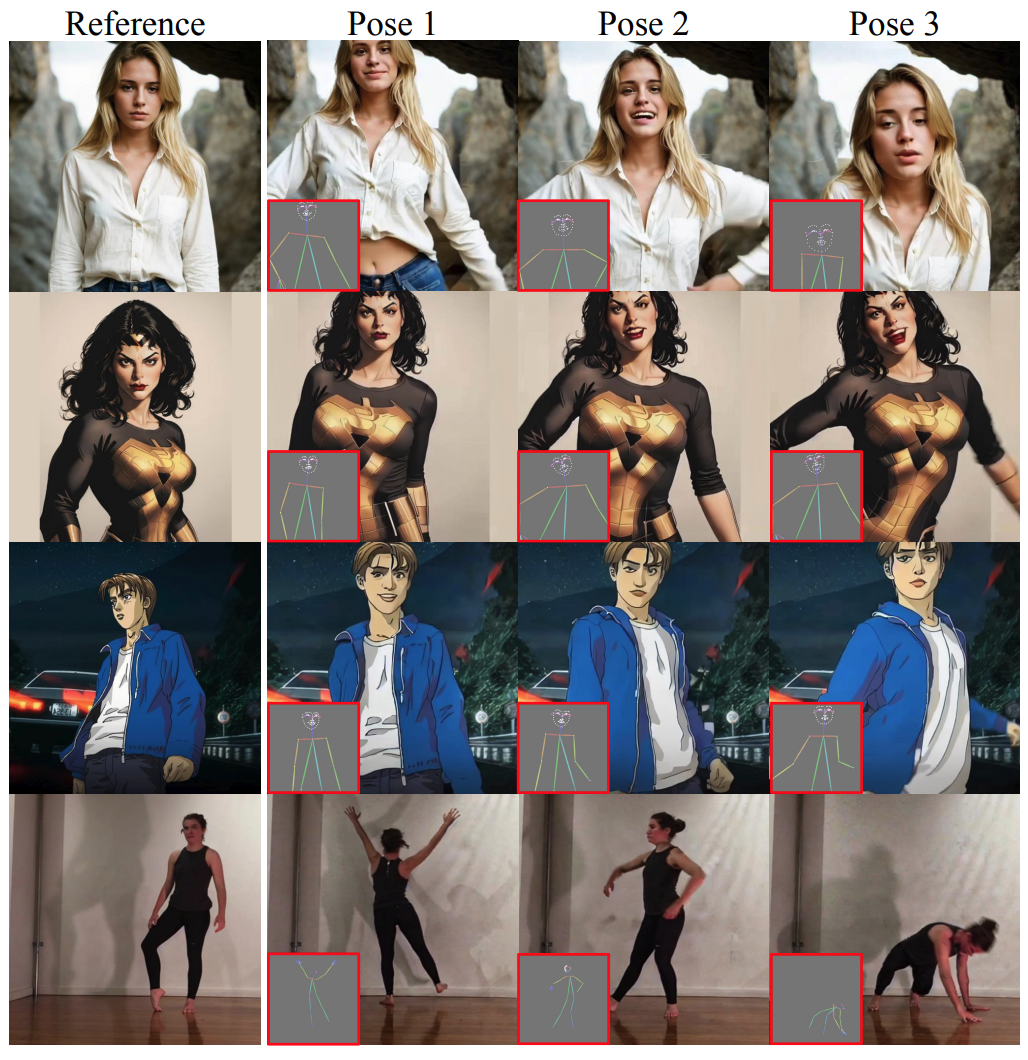

Emotions play a central role in shaping our behavior and guiding our decisions. Affective Computing strives to enable machines to recognize, understand and express emotions. Advancing automatic understanding of human emotions involves technical challenges in signal processing, computer vision and machine learning. The major technical challenges in emotion recognition include the lack of reliable labels, human variability and the scarcity of labelled data. We have studied and evaluated machine-based emotion recognition methods from EEG signals, facial expressions, pupil dilation and psychophysiological signals. Our past work demonstrated how behaviors associated with cognitive appraisals can be used in an appraisal-driven framework for emotion recognition. We are also interested in understanding the motivational components of emotions, including effort and action tendencies.

Representative publications

- Y. Yin, D. Chang, G. Song, S. Sang, T. Zhi, J. Liu, L. Luo, M. Soleymani. FG-Net: Facial Action Unit Detection with Generalizable Pyramidal Features. IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, Hawaii, 2024.

- D. Chang, Y. Yin, Z. Li, M. Tran, M. Soleymani. LibreFace: An Open-Source Toolkit for Deep Facial Expression Analysis. IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, Hawaii, 2024.

- Y. Yin, L. Lu, Y. Wu, M. Soleymani. Self-Supervised Patch Localization for Cross-Domain Facial Action Unit Detection. In 2021 16th IEEE International Conference on Automatic Face and Gesture Recognition (FG), 2021.

- Y. Yin, B. Huang, Y. Wu, M. Soleymani. Speaker-invariant adversarial domain adaptation for emotion recognition. 2020 International Conference on Multimodal Interaction (ICMI), 2020.

- S. Rayatdoost, M. Soleymani. Cross-Corpus EEG-Based Emotion Recognition, IEEE Machine Learning for Signal Processing (MLSP), Aalborg, Denmark, 2018.

- M. Soleymani, M. Mortillaro. Behavioral and Physiological Responses to Visual Interest and Appraisals: Multimodal Analysis and Automatic Recognition, Frontiers in ICT, 5(17), 2018.

- M. Soleymani, S. Asghari-Esfeden, Y. Fu, M. Pantic. Analysis of EEG Signals and Facial Expressions for Continuous Emotion Detection, IEEE Transactions on Affective Computing, 7(1): pp. 17-28, 2016.

Computational mental health

The emerging field of computational mental health aspires to leverage recent advances in human-centered artificial intelligence to assist human care providers in delivering mental health support. For example, automatic human behavior tracking can be used to train psychotherapists and to detect behavioral markers associated with mental health disorders. Identifying behavioral biomarkers that track the severity of mental health disorders, and identifying effective therapeutic behaviors, can assist treatment and help track outcomes. We study and develop machine-based methods for automatic assessment of mental health disorders (PTSD and depression).

We also research and develop computational frameworks that jointly analyze verbal, nonverbal, and dyadic behavior to better predict therapeutic outcomes in motivational interviewing.

Representative publications

- M. Tran, E. Bradley, M. Matvey, J. Woolley, M. Soleymani. Modeling Dynamics of Facial Behavior for Mental Health Assessment. In 2021 16th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2021), pp. 1-5. IEEE, 2021.

- L. Tavabi, K. Stefanov, B. Borsari, J.D. Woolley, S. Scherer, M. Soleymani. Multimodal Automatic Coding of Client Behavior in Motivational Interviewing, ACM Int’l Conference on Multimodal Interaction (ICMI), 2020.

- F. Ringeval et al. AVEC 2019 Workshop and Challenge: State-of-Mind, Detecting Depression with AI, and Cross-Cultural Affect Recognition. In Proceedings of the 9th International on Audio/Visual Emotion Challenge and Workshop, pp. 3-12. ACM, 2019.

- L. Tavabi. Multimodal Machine Learning for Interactive Mental Health Therapy. In 2019 International Conference on Multimodal Interaction, 2019. ACM.

Multimodal human behavior sensing

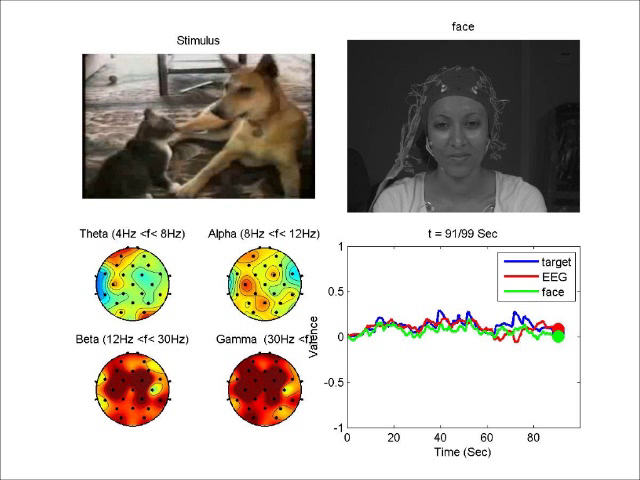

OpenSense is a platform for real-time multimodal acquisition and recognition of social signals. It enables precisely synchronized and coordinated acquisition and processing of human behavioral signals. Powered by Microsoft’s Platform for Situated Intelligence, OpenSense supports a range of sensor devices and machine learning tools, and encourages developers to add new components to the system through straightforward mechanisms for component integration. The platform also offers an intuitive graphical user interface for building application pipelines from existing components. OpenSense is freely available for academic research.

Understanding social and relational states from verbal and nonverbal behavior is another topic of interest. We are conducting research to understand the behaviors associated with empathy, self-disclosure and rapport, in order to build nonverbal behavior generation for interactive virtual humans.

Representative publications

- M. Tran, Y. Kim, C.-C. Su, M. Sun, C.-H. Kuo, M. Soleymani. Exo2Ego: A Framework for Adaptation of Exocentric Video Masked Autoencoder for Egocentric Social Role Understanding, European Conference on Computer Vision (ECCV), Milan, Italy, 2024.

- L. Tavabi, K. Stefanov, S. Nasihati Gilani, D. Traum, M. Soleymani. Multimodal Learning for Identifying Opportunities for Empathetic Responses. In 2019 International Conference on Multimodal Interaction, 2019. ACM.

- M. Soleymani, K. Stefanov, S-H. Kang, J. Ondras, J. Gratch. Multimodal Analysis and Estimation of Intimate Self-Disclosure. In 2019 International Conference on Multimodal Interaction, 2019. ACM.

- M. Soleymani, M. Riegler, P. Halvorsen. Multimodal Analysis of Image Search Intent, ACM Int’l Conf. Multimedia Retrieval (ICMR), Bucharest, Romania, 2017.

Multimedia computing

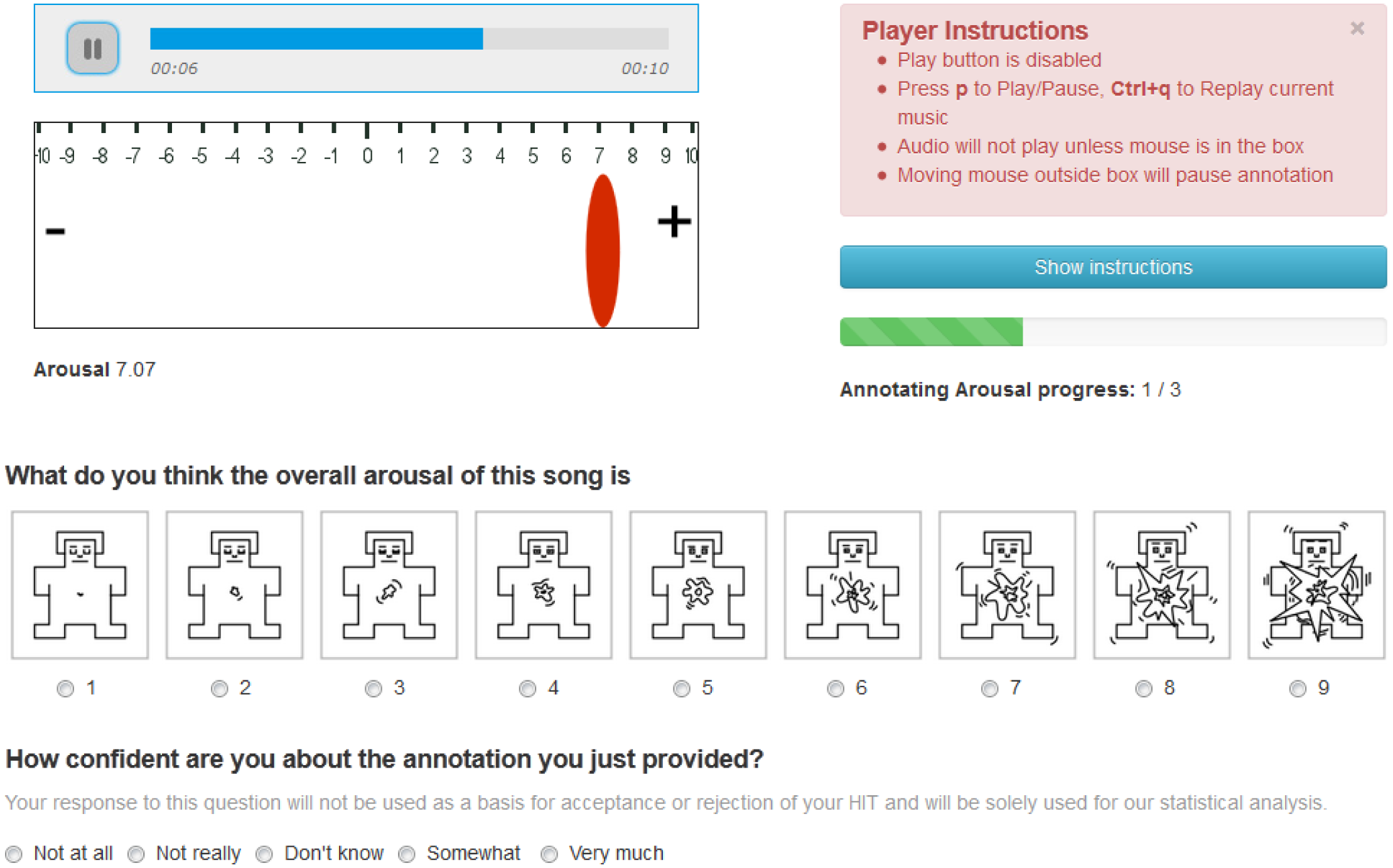

Understanding subjective multimedia attributes can help improve user experience in multimedia retrieval and recommendation systems. We have been active in developing new methods for computational understanding of subjective multimedia attributes, e.g., mood and persuasiveness. Development of such methods requires a thorough understanding of these subjective attributes to pursue the best strategies in creating databases and solid evaluation methodologies. We have developed multimedia databases and evaluation strategies through user-centered data labeling and evaluation at scale through crowdsourcing. We are also interested in attributes that are related to human perception, e.g., melody in music, comprehensiveness in visual content. Recent examples of this track of research include acoustic analysis for music mood recognition and a micro-video retrieval method with implicit sentiment association between visual content and language.

Representative publications

- Y. Song, M. Soleymani. Polysemous Visual-Semantic Embedding for Cross-Modal Retrieval, IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, 2019.

- A. Aljanaki, M. Soleymani. A data-driven approach to mid-level perceptual musical feature modeling, 19th Conference of the International Society for Music Information Retrieval (ISMIR 2018), Paris, France, 2018.

- A. Aljanaki, Y.-H. Yang, M. Soleymani. Developing a Benchmark for Emotional Analysis in Music, PLOS ONE, 12(3):e0173392, 2017.